Posts

Last edited: Jul 29, 2015, 1:37pm -0600

hackthe.computer 2015!

This article was originally posted on Space Monkey’s blog.

On Monday, we wrapped up the final round of the 2015 hackthe.computer coding competition. We had 972 people sign in to the coding competition with Github accounts, 121 people push solution attempts to our system, and 92 people actually score points and make it to the leaderboard. Over the week we had over 9000 unique pushes of code. For the final round we invited 12 of the top competitors from the online round to our offices in Lehi, Utah, and they had three hours (minus how much time I spent talking) to write an AI for a little tank game we made. We put up $20,000 in prizes.

Why did we do this? Many companies run coding competitions, and a large part of the reason is for fun. Many programmers get into the field simply for the love of solving problems, and to that end we tried to make a bunch of challenging and engaging problems. But coding competitions aren’t all selfless - our company is looking to recruit, and what a better way to find high quality engineers than to create a fun competition. We’re looking for people who want to and enjoy solving some of the toughest problems, so if you think these sorts of problems are something you’d like to work on for your day job, please send us an email (jobs@spacemonkey.com)! Many of these problems are surprisingly representative of the problems we have to solve every day at Space Monkey.

The competition happened in two stages - the online round ran from 9am on July 20th until 2pm on July 27th. The final round started at 5pm on the 27th and happened in person and in our offices.

Let’s talk about our awesome final round first.

Final round

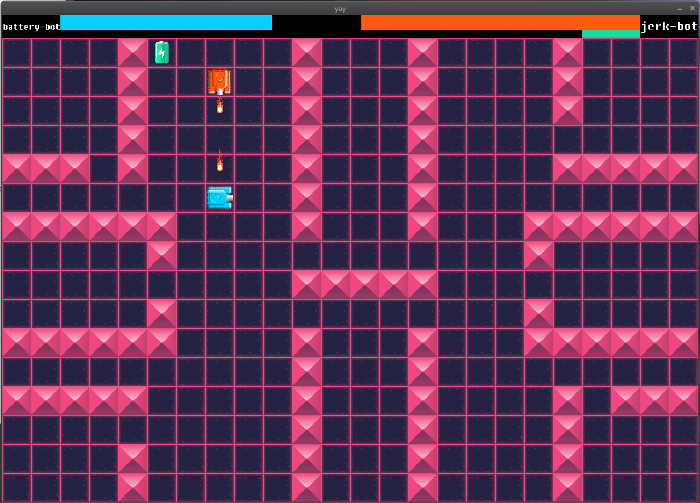

Our final round was so cool. We actually didn’t start development on it until the Wednesday or Thursday during the competition. We taught ourselves game development and we didn’t do too shabby. Here’s a screenshot:

The documentation for this game is here: https://github.com/SpaceMonkeyInc/htc2015/tree/master/final/final/docs.md

The game is a Go program, and the source for it is here: https://github.com/SpaceMonkeyInc/htc2015/tree/master/final/

You’ll first have to create the assets file (Makefile in

final/src/sm/final/assets/), possibly after redownloading the sound files

(listed in final/final/sounds/CREDITS), then you can build everything with

GOPATH=/path/to/htc2015/final go install sm/...

The competition was fierce, but ultimately luk, chc, and sfw came out

on top with 1st, 2nd, and 3rd place!

We took a short clip of the competition as it ran: https://mnky.es/f/LnRejdA6OaEIa_ZUhH767w/2015-07-27%2020.17.04.mp4

Online round problems

We posted 10 problems to the online round. Entrants had to read the problem

description, pull down a git repository from the special git server we

built, write a program to solve the problem, then push it back up to our

special git server. Once landed on our server, we would kick off compiling

their code (if applicable) inside of a restricted execution jail, then run

their code against a series of tests we wrote. Each set of tests was designed

to illuminate a particular aspect of the problem, to determine if the

contestant thought about that aspect and how they handled it.

Many of these programming contests have deterministic tests, and so therefore

can’t provide back to the contestant much debugging output without risking

leaking what the test was. Our competition was no different, so we made the

following concessions. During the compilation phase, the contestant could

receive back stdout and stderr, and would not be penalized for compilation

time (though limited). The compilation phase let the contestant run whatever

they wanted inside of our execution jail. Then the server would move on to

example test phase. In example test phase, the contestant’s program was given

the example test in the problem description, and was tested to make sure they

provided the expected output. Since this was a test case that everyone already

had, we rerouted stderr back to the contestant during this phase (stdout

was in use for the test itself). Finally, if all of that went well, the server

would then move to test mode, and stderr would also be shut off.

The jail technology was enabled thanks to recent Linux kernel namespacing extensions that we kicked off using the recently released nsjail project. This allowed us to shut off disk writes, network access, limit memory, cpu time, wallclock time, etc, in a very convenient manner. This did nearly everything we wanted, except we struggled to get OpenJDK or Oracle JDK to run inside the jail. It’s most certainly possible to do, but with the limited time we had we cut our losses, said goodbye to Clojure, Java > 1.6, and Scala, and simply installed GCJ (and thus Java 1.5). We definitely apologize to those who wanted to compete with more recent JVM technologies.

That said, we were able to successfully install and run recent versions of the GCC toolchain (GCC/G++/GFortran/GCJ/etc), GHC, Go, Mono, Node, Python, Bash, Elixir, Erlang, GDC, Julia, Lua, Perl, Racket, Ruby, and Rust. We didn’t install many libraries and relied on constestants to check in the libraries that they needed.

Even with how well our jail setup was working, we still didn’t trust it completely, so compilation and execution happened in EC2 on boxes in a VPC that had no outgoing route to the internet, firewalled such that no outgoing connections were allowed. Essentially we black-holed the executors so that even if they were compromised, they couldn’t do anything unless connected to from one of our trusted computers.

git pushes were restricted in their frequency and size. For all of our git

access we used an SSH library written in Go to communicate with contestants.

We intercepted some of the git protocol over SSH to add our own tags to

git repository references as they came in. Some of what we used is available

as open source in this project.

Contestants were scored based on the total number of tests passed across all problems, breaking ties by the amount of time their program took to run a set of tests.

You can find the full list of problems and the test cases they ran with at https://github.com/SpaceMonkeyInc/htc2015.

Problem: Bowie’s in a maze

You can read the actual problem description and see the leaderboard for this problem here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/maze1/README.md

This problem was primarily intended to be a fun entry-level problem with a twist. Like many of the other problems, the test cases were designed to be mostly passable with a naive solution, and at least one test case would be held off to try and force the contestant to solve the problem with the most efficient possible solution. This problem was no different - two of the three test cases should have been passable with simple A* search, but one test case attempted to encourage contestants to implement Jump point search, or another algorithm that does well with wide-open spaces.

Of course, one interesting problem requirement we made is that there was exactly one correct solution, which added a number of constraints to our test cases, and potentially added new avenues for optimization.

The competition’s test cases for this problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/tree/master/maze1

Problem: The NSA needs friends

This problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/nsa1/README.md

We only gave this problem two points, since this problem (assuming you found the right Wikipedia page) may have been one of the most straightforward. Unfortunately, finding the right Wikipedia page was not a strategy all contestants opted for, and so these two points ended up being challenging for many.

This problem is solveable directly using the Bron-Kerbosch algorithm used for finding maximal cliques.

The test cases for this problem were computer generated, but can be found here: https://github.com/SpaceMonkeyInc/htc2015/tree/master/nsa1

An example solution is here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/nsa1/solutions/soln.py

Problem: The NSA needs more friends

This problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/nsa2/README.md

This problem had the usual three points since it was a little more complicated of a problem than the previous NSA-themed problem. It was similar to the previous NSA problem in the sense that it was intended to be a direct test of the contestant’s ability to find the appropriate Wikipedia page.

This problem is solveable directly using a Strongly connected component detection algorithm. There’s a few choices, but one of the most straightforward to implement is Tarjan’s strongly connected components algorithm.

The test cases for this problem were also computer generated, but can be found here: https://github.com/SpaceMonkeyInc/htc2015/tree/master/nsa2

An example solution is here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/nsa2/solutions/soln.py

Problem: Don’t be a Kad

This problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/kad/README.md

This is the first of a few problems that are directly related to the work we do at Space Monkey. It’s called “Don’t be a Kad” because the problem of efficiently answering XOR-nearest queries is something you have to solve when building a Kademlia DHT.

At Space Monkey, we’ve built a large DHT based on Kademlia on low-power devices and doing efficient XOR-nearest lookups is key to the DHT’s success.

One interesting property of an XOR metric on a fixed-length keyspace is that it

confers a tree structure on the keyspace. The first level of the tree branches

on the first bit, the second level of the tree branches on the second bit, and

so on. XOR-near values to a given query value will not have to travel around

on the tree structure very far to find their k-nearest neighbors.

At Space Monkey, we’ve come up with an algorithm based heavily on the algorithm

the Kademlia paper

suggests that perhaps isn’t novel, but works well for us. When preparing a

database for k-nearest XOR lookups, we maintain a tree of buckets where each

bucket has at most k values. Each bucket has a specific shared bit prefix,

and buckets are organized on a binary tree for fast lookup of that bucket given

a prefix. To find the nearest n values to a value v, we find the bucket

that v would belong in, thus getting up to k nearest values to v. If

that’s n or more, then we sort by XOR distance and we’re done. If it’s less

than n, then we compute the XOR-farthest possible value from k that could

be in the bucket that shares a prefix with k (which happens to be the value

that has the same bit prefix as the bucket bit prefix, and then has the

remaining bits as the inverse of bits of v). Then we add one to the distance

of that farthest possible value, then find the bucket that would contain the

key at that distance. This works because there is exactly one point that is a

specific XOR-distance away from any other point. We continue in this manner

until we’ve found n values or we’ve exhausted all buckets.

I admit this is a rushed description, but it hopefully gives you a flavor of one way to solve this type of problem in a way that’s different than just sorting the database of values by distance each time. Most test cases could be solved by a naive solution (something akin to sorting by distance for each query), but one test case in particular required efficiently answering a large amount of lookups.

The test cases for this problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/tree/master/kad

Problem: nbd-lang

This problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/nbd/README.md

This problem was challenging, but ultimately straightforward. Contestants simply had to write a small programming language interpreter. The challenges here involved understanding the rules the example program indicated about variable scoping, whether or not the language handled negative numbers (even if the grammar didn’t support a programmer entering them directly), and whether or not the program handled arbitrarily large integers, which the grammar did not restrict.

If a contestant’s interpreter could execute our sample programs and provide the expected output, the contestant passed.

We gave this problem a slightly larger than usual 4 points.

The test cases for this problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/tree/master/nbd

Problem: Fencing

This problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/fencing/README.md

This problem was attempting to measure two things really.

The first was how closely you could follow the SVG spec for path data. A careful reading of section 8.3.9’s grammar for path data was required to determine all the possible delimeters your parser might run into. It wasn’t enough to just be able to solve the problem algorithmically, you had to actually read the specification documentation and parse weird but ultimately correct path descriptions.

The second was actually solving the problem algorithmically. There’s a number of possible algorithms to detect whether or not a point is in a polygon, but one particularly effective way is the ray tracing method. Unfortunately, many of these methods don’t help you determine if a point is on the perimeter, which must be solved separately. You can iterate over all the edges of the polygon to determine if a point is on the line. The first Google hit for how to actually make such a determination is this Stack Overflow question, which, as it happens, works perfectly.

The test cases did a great job of exercising weird parser edge cases and also in checking the performance of submitted solutions.

The test cases for this problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/tree/master/fencing

Problem: Recover the Source

This problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/recover/README.md

We got a ton of traffic for this problem, as it teaches contestants how an erasure code works, which is a particularly neat mathematical trick with broad applicability for data storage and error correction. This is yet another problem with direct applicability to Space Monkey’s core set of challenges.

We spent a lot of time on this problem trying our best to make the math approachable. With many programmers, even those with some college-level math, if you say something like “Lagrangian” they panic and their math-brain shuts down, so we did our best to make an actually relatively simple topic as simple as possible.

This problem didn’t really have much of a trick to it other than that you could get full precision if you did all of your calculations with a rational number library. Go’s math/big.Rat comes to mind.

The test cases for this problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/recover

Problem: Contact!

This problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/contact/README.md

I don’t think any problem caused us as much schadenfreude as this problem, though I also think this problem was one of the most fun. This problem’s main goal was an attempt to measure spatial reasoning skills.

There wasn’t really any specific algorithm, trick, or optimization with this problem. You basically got full credit if you could solve it at all. Essentially if you could wrap your head around the rotations and mappings you’d need to do to fold and unfold cubes in a myriad of ways, you could pass this problem.

The test cases for this problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/contact

Problem: Utah pentominoes

This problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/utah/README.md

This problem was another spatial reasoning test, albeit a bit simpler and more game oriented. We initially considered having this problem test full Minimax-style solving, but concluded that might be too hard to fairly test and judge in a way amenable to easy debugging. So instead, the problem was restricted to last move decisions.

The test cases for this problem exercised a few different things - whether or not you could handle when the opponent was on the verge of victory and you weren’t, whether or not you were on the verge of victory and your opponent wasn’t, whether or not you were both on the verge of victory, whether or not your program puked if there were multiple Utah-shaped pentominoes you could complete with the same move, and a few other things.

The test cases for this problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/utah

Problem: Diff those Hellmen

This problem can be found here: https://github.com/SpaceMonkeyInc/htc2015/blob/master/dh/README.md

I think this problem was one of our more interesting problems, partially because it broke out of the fixed input/fixed output model. The goal for this problem was to simultaneously teach contestants Diffie-Hellman key exchange if they didn’t already know it, and also ascertain contestants ability to use cryptographic constructions such as AES-GCM, which we mistakenly thought might be the easiest construction to explain and learn.

The Diffie-Hellman part worked out well. Diffie-Hellman key exchange is a critical underpinning of forward-secrecy and a number of other cryptographic patterns. It’s also deceptively simple to implement and understand, and many entrants found this.

The AES-GCM part (and our contrived protocol) on the other hand, was much more finicky and hard to get right. In fact, in even describing the problem I take complete blame in making the key be a SHA-256 hash of a 257-byte long string (sorry!). Making sure the auth tag and the IV/nonce and all the pieces lined up correctly wasn’t straightforward, even despite the tooling we wrote into this problem so that entrants could more easily debug their submissions when in example test mode.

The test cases in this case are actual programs that know how to do a

Diffie-Hellman handshake, and can be found inside this GOPATH here:

https://github.com/SpaceMonkeyInc/htc2015/tree/master/dh/solutions/src/sm/validators

Results

It was an awesome competition; we certainly had a lot of fun running it, and we’d love to do it again.

Once again, a big congratulations to jps, LOL, PDD, luk, rya, chc,

CRG, cxu, jon, and sfw, with a special congratulations to the final

round winners luk, chc, and sfw!